Observability is no longer a buzzword but an essential requirement for data-driven companies!

In the last decade, the emergence of cloud computing and microservices has made applications more complex, distributed, and dynamic. In fact, over 90% of large enterprises have adopted a multi-cloud infrastructure.

While the shift to scalable systems has benefited businesses, monitoring and managing them has become very challenging.

To make matters worse, companies are realizing that the tools they previously relied on aren’t fit for the job! Legacy monitoring systems lack visibility, create siloed environments, and hinder process management and automation efforts.

It is no surprise that DevOps and SRE teams are turning to observability to understand system behavior, facilitate troubleshooting, and ensure performance enhancements. Recent reports even predict that the observability market size could reach USD 4.1 billion by 2028 from its value of USD 2.4 billion in 2023—a CAGR of 11.7%.

In this article, we delve into the essence of observability—defining the term while understanding its importance, challenges, and benefits.

What is observability?

In simple words, observability is the ability to assess a system’s current state based on the data it produces. It provides a comprehensive understanding of a distributed system by looking at all the available information.

The term “observability” comes from control theory, where it describes how well a system’s internal state can be inferred from its external outputs. This is perhaps why many people confuse observability with monitoring, two related yet different concepts.

Observability offers:

- A holistic view of IT systems in real-time.

- Actionable insights.

- Tracing.

- Root cause analysis.

- Metrics collection and analysis.

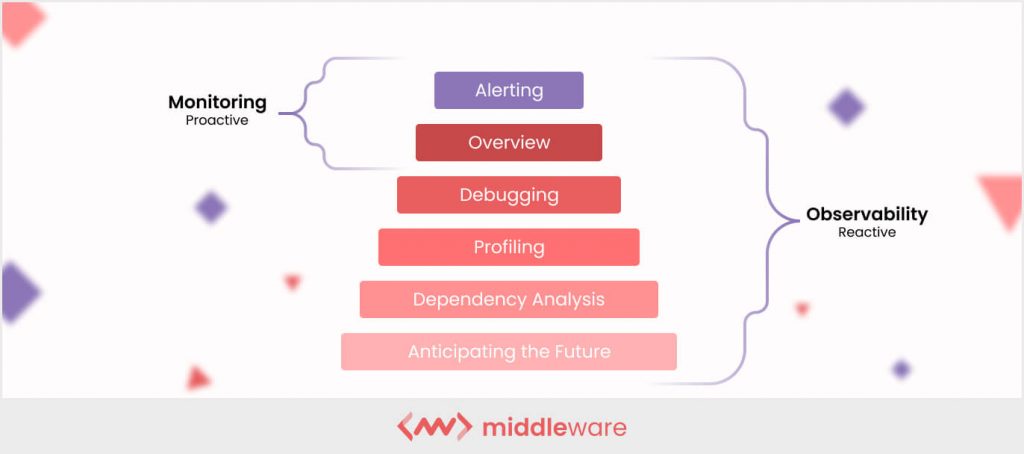

Observability vs. Monitoring

Cloud monitoring solutions use dashboards to display performance indicators so that IT teams can identify and resolve issues. However, they merely flag that a performance issue exists; they do not explain why it happened.

As such, monitoring tools must get better at overseeing complex cloud-native applications and containerized setups that are prone to security threats.

In contrast, observability uses logs, traces, and metrics across your infrastructure. Such platforms provide useful information about the system’s health at the first signs of an error, alerting DevOps engineers about potential problems before they become serious.

Observability grants access to data encompassing system speed, connectivity, downtime, bottlenecks, and more. This equips teams to curtail response times and ensure optimal system performance.

As per recent reports, nearly 64% of organizations using observability tools have experienced mean time to resolve (MTTR) improvements of 25% or more.

Read more about Observability vs. Monitoring.

How does observability work?

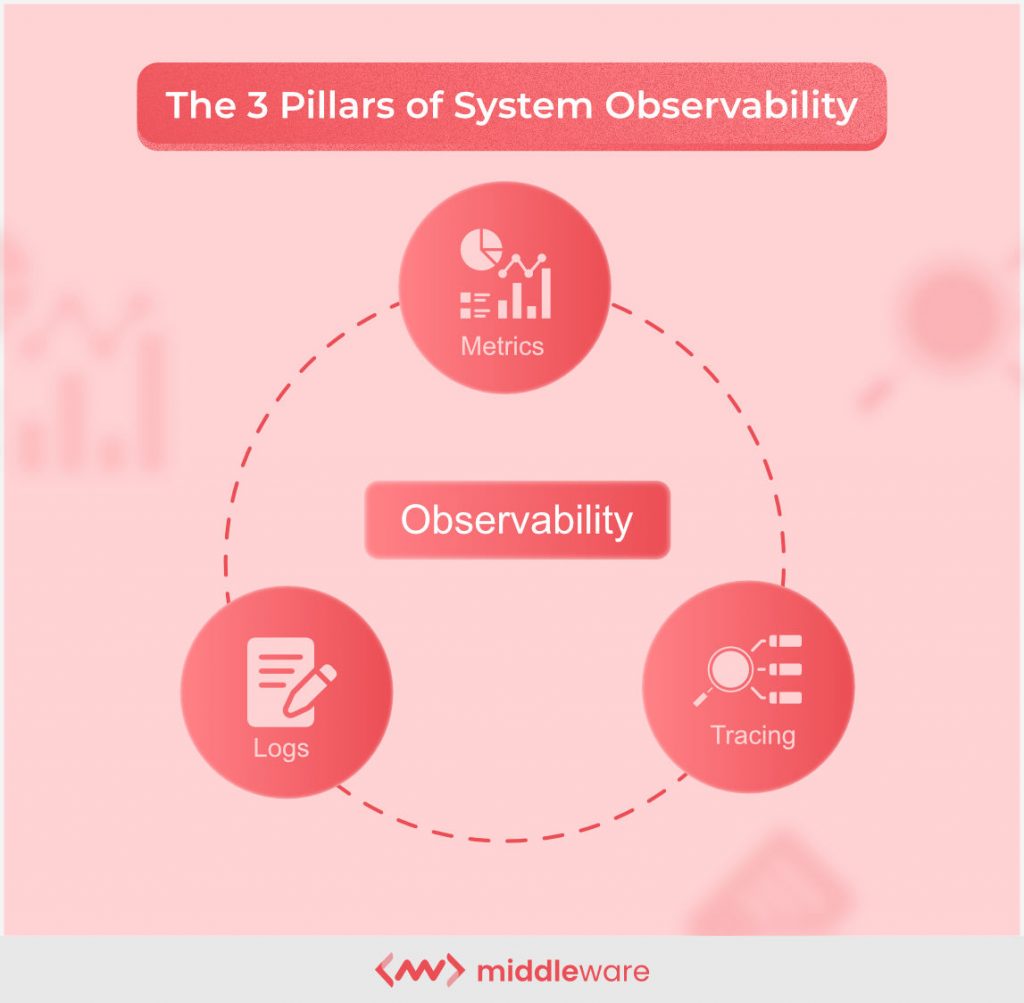

Observability operates on three pillars: logs, metrics, and traces. By collecting and analyzing these elements, you can bridge the gap between understanding ‘what’ is happening and ‘why’ it’s happening.

With this insight, teams can quickly spot and resolve problems in real-time. While methods may differ across platforms, these telemetry data points remain constant.

Logs

Logs are records of the individual events that happen within an application during a particular period, each with a timestamp indicating when the event occurred. They help reveal unusual behavior of components in a microservices architecture. Logs generally come in three formats:

- Plain text: Common and unstructured.

- Structured: Formatted in JSON.

- Binary: Used for replication, recovery, and system journaling.

Cloud-native components emit these log types, leading to potential noise. Observability transforms this data into actionable information.
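To make the difference between plain-text and structured logs concrete, here is a minimal sketch that uses only Python’s standard `logging` and `json` modules. The `JsonFormatter` class, the `checkout` logger name, and the field names are assumptions chosen for illustration; they do not come from any particular observability platform.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object (hypothetical field names)."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "service": record.name,
            "message": record.getMessage(),
        }
        return json.dumps(payload)


def build_logger(name: str, stream) -> logging.Logger:
    """Create a logger that writes structured JSON lines to the given stream."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    return logger


# Write to an in-memory buffer so the example has no side effects.
buffer = io.StringIO()
log = build_logger("checkout", buffer)
log.warning("payment retry %d for order %s", 2, "A-1001")

# Because the line is JSON, a pipeline can filter on fields instead of parsing free text.
entry = json.loads(buffer.getvalue())
print(entry["level"], entry["service"], entry["message"])
```

With structured output, filtering by `level` or `service` becomes a field lookup rather than a regular expression over free text, which is one way to cut through log noise.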

Metrics

Metrics are numerical values describing service or component behavior over time. They include timestamps, names, and values, which makes them easy to query and efficient to store.

Metrics offer a comprehensive overview of system health and performance across your infrastructure.

However, metrics have limitations. Though they indicate breaches, they do not shed light on underlying causes.
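As a rough illustration of that limitation, the sketch below models metric data points with a name, timestamp, and value, then checks them against a threshold. The `MetricPoint` type, the metric names, and the 500 ms threshold are all hypothetical. Notice that the result shows when latency breached the limit but says nothing about the cause.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class MetricPoint:
    name: str        # metric name, e.g. "http.latency_ms" (hypothetical)
    timestamp: int   # Unix time in seconds
    value: float


def breaches(points, name, threshold):
    """Return the points of one metric whose value exceeds the threshold."""
    return [p for p in points if p.name == name and p.value > threshold]


points = [
    MetricPoint("http.latency_ms", 1700000000, 120.0),
    MetricPoint("http.latency_ms", 1700000060, 135.0),
    MetricPoint("http.latency_ms", 1700000120, 910.0),
    MetricPoint("cpu.utilization", 1700000120, 0.42),
]

latency = [p.value for p in points if p.name == "http.latency_ms"]
slow = breaches(points, "http.latency_ms", threshold=500.0)

print(f"mean latency: {mean(latency):.1f} ms")
print(f"breach timestamps: {[p.timestamp for p in slow]}")
```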

Traces

Traces complement logs and metrics by following a request’s lifecycle across the services of a distributed system.

They help analyze request flows and operations encoded with microservices data, identify services causing issues, ensure quick resolutions, and suggest areas for improvement.
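One way to picture a trace is as a tree of spans, where each span records which service handled part of the request and for how long. The sketch below is a simplified model, not the data format of any specific tracing tool: the `Span` fields, the service names, and the assumption that child spans run one after another are all illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Span:
    trace_id: str
    span_id: str
    parent_id: Optional[str]  # None marks the root span of the trace
    service: str
    start_ms: int
    end_ms: int

    @property
    def duration_ms(self) -> int:
        return self.end_ms - self.start_ms


def self_time_ms(span, spans):
    """Time spent in the span itself, excluding its direct children.

    Assumes children run sequentially and do not overlap.
    """
    children = [s for s in spans if s.parent_id == span.span_id]
    return span.duration_ms - sum(c.duration_ms for c in children)


def slowest_service(spans):
    """Return the service whose span has the largest self time."""
    return max(spans, key=lambda s: self_time_ms(s, spans)).service


trace = [
    Span("t1", "a", None, "api-gateway", 0, 480),
    Span("t1", "b", "a", "cart", 10, 60),
    Span("t1", "c", "a", "payment", 70, 460),
    Span("t1", "d", "c", "fraud-check", 80, 120),
]

print(slowest_service(trace))
```

Ranking by self time rather than total duration keeps the root span from always looking like the culprit, since its duration includes everything beneath it.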

Unified observability

Successful observability stems from integrating logs, metrics, and traces into a holistic solution. Rather than employing separate tools, unifying these pillars helps developers gain a better understanding of issues and their root causes.

As per recent studies, companies with unified telemetry data can expect a faster Mean time to detect (MTTD) and MTTR and fewer high-business-impact outages than those with siloed data.
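For readers who want to track these two numbers themselves, here is a small sketch that computes them from incident records. The incident timestamps are made up, and the definitions are an assumption: MTTD is measured from the start of the incident to detection, and MTTR from the start of the incident to resolution. Some teams measure MTTR from detection instead.

```python
from datetime import datetime
from statistics import mean

# Hypothetical incident records with ISO 8601 timestamps.
incidents = [
    {"started": "2023-11-01T10:00", "detected": "2023-11-01T10:12", "resolved": "2023-11-01T11:00"},
    {"started": "2023-11-07T22:30", "detected": "2023-11-07T22:34", "resolved": "2023-11-07T23:10"},
    {"started": "2023-11-15T03:00", "detected": "2023-11-15T03:20", "resolved": "2023-11-15T05:00"},
]


def minutes_between(start: str, end: str) -> float:
    """Elapsed minutes between two ISO 8601 timestamps."""
    delta = datetime.fromisoformat(end) - datetime.fromisoformat(start)
    return delta.total_seconds() / 60


# Mean time to detect: incident start until detection.
mttd = mean(minutes_between(i["started"], i["detected"]) for i in incidents)
# Mean time to resolve: incident start until resolution (assumed definition).
mttr = mean(minutes_between(i["started"], i["resolved"]) for i in incidents)

print(f"MTTD: {mttd:.1f} min, MTTR: {mttr:.1f} min")
```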

Benefits of observability

According to the Observability Forecast 2023, organizations are reaping a wide range of benefits from observability practices:

- Improved system uptime and reliability.

- Increased operational efficiency.

- Enhanced security vulnerability management.

- Improved real-user experience.

- Increased developer productivity.

These are just the tip of the iceberg. Companies using full-stack observability have seen several other advantages:

- Faster incident resolution.

- Reduced downtime costs.

- Faster detection and resolution of high-impact outages.

- More unified telemetry data.

- Aligned observability with core business goals.

Additionally, companies with full-stack observability or mature observability practices have gained high ROIs. As of 2023, the median annual ROI for observability stands at 100%, with an average return of $500,000.

Is observability important?

Previously, simpler systems were easier to manage and monitor, using basic metrics like CPU, memory, and network conditions. Simpler systems tend to exhibit predictable patterns when issues arise, making diagnosis pretty easy.

However, modern systems are built on cloud-native microservices and Kubernetes clusters, often within an open-source ecosystem. Developed and deployed by distributed teams, these systems introduce a fresh set of challenges that legacy tools were never meant to solve.

Accelerated software deployment through DevOps and continuous delivery, together with systems that have inherent failure points, has made issue detection more complex. Server downtime, cloud service disruptions, and new code that affects the end-user experience add to the problem.

Modern problems demand modern solutions: observability tools! With these robust systems, pinpointing the root cause of problems within distributed systems becomes far more manageable.

As the shift towards microservices has decentralized responsibilities across teams and removed discrete app ownership, observability tools can help multiple teams understand, analyze, and troubleshoot various application areas.

In fact, 71% of organizations see observability as a key enabler to achieving core business objectives and reducing incident response time. So, yes, it is important!

How to maximize observability?

Observability is so much more than data collection. Access to logs, metrics, and traces marks just the beginning. True observability comes alive when telemetry data improves end-user experience and business outcomes.

Open-source solutions like OpenTelemetry set standards for cloud-native application observability, providing a holistic understanding of application health across diverse environments.

Real-user monitoring offers real-time insight into user experiences by detailing request journeys, including interactions with various services. Together with synthetic checks and recorded sessions, it helps keep an eye on APIs, third-party services, browser errors, user demographics, and application performance.

With the ability to visualize system health and request journeys, IT, DevSecOps, and SRE teams can easily troubleshoot potential issues and recover from failures.

Throwing AI into the mix makes everything better!

AI can enhance observability by using telemetry data to improve end-user experiences and business outcomes. Blending AIOps and Observability can optimize real user monitoring and automate the analysis of vast data streams, allowing teams to maximize their overall efficiency to a great extent.

Observability best practices

There is no doubt that observability offers immense value. However, it’s important to understand that most available tools lack business context.

On top of that, several organizations look at technology and business as two separate disciplines, hindering their overall ability to maximize their use of observability. The situation highlights the need for a defined set of best practices.

- Unified telemetry data: Consolidate logs, metrics, and traces into centralized hubs for a comprehensive overview of system performance.

- Metrics relevance: Identify and monitor important metrics that are aligned with organizational goals.

- Alert configuration: Set benchmarks for those metrics and automate alerts to ensure quick issue identification and resolution.

- AI and machine learning: Leverage machine learning algorithms to detect anomalies and predict potential problems.

- Cross-functional collaboration: Foster collaboration among development, operational, and other business units to ensure transparency and overall performance.

- Continuous enhancement: Regularly assess and improve observability strategies to align with evolving business needs and emerging technologies.
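To give a feel for the anomaly detection practice in the list above, here is a deliberately simple statistical stand-in: a rolling z-score check. It is not machine learning, and production tools use more sophisticated models. The function name, the window of 5 samples, the threshold of 3 standard deviations, and the latency values are all assumptions for illustration.

```python
from statistics import mean, stdev


def find_anomalies(values, window=5, z_threshold=3.0):
    """Flag indices whose value deviates strongly from the preceding window."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # A flat baseline gives no scale for a z-score; flag any change.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > z_threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical latency samples in milliseconds with one obvious spike.
latency_ms = [101, 99, 102, 98, 100, 103, 97, 240, 101, 99]
print(find_anomalies(latency_ms))
```

A rolling baseline adapts to gradual drift, which a fixed threshold cannot do. The trade-off is that a large spike inflates the baseline for the next few samples, so follow-up anomalies can be missed.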

Read more about observability best practices.

Finding the right observability tool

Selecting the right observability platform can be difficult. You need to consider capabilities, data volume, transparency, corporate goals, and cost.

Here are some points worth considering:

User-friendly interface

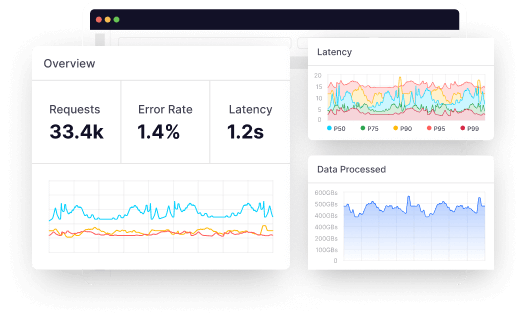

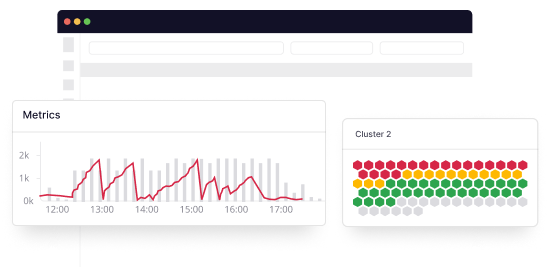

Dashboards present system health and errors, aiding comprehension at various system levels. A user-friendly solution is crucial to engage stakeholders and integrate smoothly into existing workflows.

Real-time data

Accessing real-time data is vital for effective decision-making, as outdated data complicates actions. Utilizing current event-handling methods and APIs ensures accurate insights.

Open-source compatibility

Prioritize observability tools using open-source agents like OpenTelemetry. These agents reduce resource consumption, enhance security, and simplify configuration compared to in-house solutions.

Easy deployment

Choose an observability platform that can quickly be deployed without stopping daily activities.

Integration

The tools must be compatible with your technology stack, including frameworks, languages, containers, and messaging systems.

Clear business value

Benchmark observability tools against key performance indicators (KPIs) such as deployment time, system stability, and customer satisfaction.

AI-powered capabilities

AI-driven observability helps reduce routine tasks, allowing engineers to focus on analysis and prediction.

Top 5 observability platforms

Once you have a clear idea of your organizational goals, observability platforms can be compared. Here are the leading five options:

Middleware

Middleware is a cloud-based observability platform that uses AI to break down data and insight barriers between containers. It can quickly identify the root causes of problems, detect both infrastructure and application issues in real-time, and provide solutions.

Furthermore, Middleware unites metrics, logs, and traces in a single dashboard to help solve problems quickly, reducing downtime and improving user experience.

Splunk

Splunk is an advanced analytics platform powered by machine learning for predictive real-time performance monitoring and IT management. It excels in event detection, response, and resolution.

Datadog

Datadog is designed to help IT, development, and operations teams gain insights from a variety of applications, tools, and services. This cloud monitoring solution provides useful information to companies of all sizes and sectors.

Dynatrace

Dynatrace provides both cloud-based and on-premises solutions with AI-assisted predictive alerts and self-learning APM. It is easy to use and offers various products that render monthly reports about application performance and service-level agreements.

Observe, Inc.

Observe is a SaaS tool that provides visibility into system performance. It provides a dashboard that displays the most important application issues and overall system health. It is highly scalable and uses open-source agents to quickly gather and process data, making the setup process simpler.

Observability challenges

Here’s an interesting question: if observability provides so many advantages, then what’s stopping organizations from going all in?

Cost: In 2023, nearly 80% of companies experienced pricing or billing issues with an observability vendor.

Data overload: The sheer volume, speed, and diversity of data and alerts can bury valuable information in noise. This fosters alert fatigue and can increase costs.

Team segregation: Teams in infrastructure, development, operations, and business often work in silos. This can lead to communication gaps and prevent the flow of information within the organization.

Causation clarity: Pinpointing actions, features, applications, and experiences that drive business impact is hard. Regardless of how great the observability platform is, companies still need to connect correlations to causations.
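The data overload challenge above often shows up as alert fatigue. One common mitigation is to group repeated alerts into a single incident. The sketch below is a minimal illustration of that idea, assuming alerts are dictionaries with hypothetical `ts`, `service`, and `check` fields and that a 300-second window is a sensible grouping interval.

```python
def group_alerts(alerts, window_s=300):
    """Collapse repeated alerts for the same (service, check) pair.

    An alert that fires within `window_s` seconds of the previous alert
    in its group is merged into that group's incident.
    """
    incidents = []
    open_incident = {}  # (service, check) -> most recent incident for that pair
    for alert in sorted(alerts, key=lambda a: a["ts"]):
        key = (alert["service"], alert["check"])
        current = open_incident.get(key)
        if current is not None and alert["ts"] - current["last_ts"] <= window_s:
            current["count"] += 1
            current["last_ts"] = alert["ts"]
        else:
            current = {
                "service": alert["service"],
                "check": alert["check"],
                "first_ts": alert["ts"],
                "last_ts": alert["ts"],
                "count": 1,
            }
            incidents.append(current)
            open_incident[key] = current
    return incidents


# Hypothetical alert stream: five raw alerts, timestamps in seconds.
alerts = [
    {"ts": 0, "service": "payment", "check": "latency"},
    {"ts": 60, "service": "payment", "check": "latency"},
    {"ts": 90, "service": "cart", "check": "errors"},
    {"ts": 120, "service": "payment", "check": "latency"},
    {"ts": 1000, "service": "payment", "check": "latency"},
]

for incident in group_alerts(alerts):
    print(incident["service"], incident["check"], incident["count"])
```

Here five raw alerts collapse into three incidents, so the on-call engineer sees one entry for the payment latency burst instead of three separate pages.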

The future of observability

As 2023 comes to an end, the future of observability holds exciting possibilities!

In the days to come, the industry will see a major shift, moving away from legacy monitoring to practices that are built for digital environments.

Full-stack observability tops this list, with nearly 82% of companies gearing up to adopt 17 capabilities through 2026. The idea of tapping natural language and Large Language Models (LLMs) to build more user-friendly interfaces is also gaining steam.

Furthermore, industry players are upping the ante by tapping into AI to offer a unified system of record, end-to-end visibility, and high scalability.

They promise to democratize observability, deliver real-time insights into operations, reduce downtime, improve user experiences, and ensure customer satisfaction.

Middleware is leading this change with its AI-powered observability solutions that can unify telemetry data into a single location and deliver actionable insights in real-time.

This helps organizations better manage multi-cloud environments and ensure seamless migrations. Such a comprehensive approach to observability can help companies make the most of multi-cloud infrastructures.


FAQs

What is observability?

Observability entails gauging a system’s present condition through the data it generates, including logs, metrics, and traces. It involves deducing internal states by examining output over a defined period. This is achieved by leveraging telemetry data from instrumented endpoints and services within distributed systems.

Why is observability important?

Observability is essential because it provides greater control and complete visibility over complex distributed systems. Simple systems are easier to manage because they have fewer moving parts.

However, in complex distributed systems, monitoring is necessary for CPU, logs, traces, memory, databases, and networking conditions. This monitoring helps in understanding these systems and applying appropriate solutions to problems.

What are the three pillars of observability?

The three pillars of observability are logs, metrics, and traces.

- Logs: Logs provide essential insights into raw system information, helping to determine the occurrences within your database. An event log is a time-stamped, unalterable record of distinct events over a specific period.

- Metrics: Metrics are numerical representations of data that can reveal the overall behavior of a service or component over time. Metrics include properties such as name, value, label, and timestamp, which convey information about service level agreements (SLAs), service level objectives (SLOs), and service level indicators (SLIs).

- Traces: Traces illustrate the complete path of a request or action through a distributed system’s nodes. Traces aid in profiling and monitoring systems, particularly containerized applications, serverless setups, and microservices architectures.

How do you implement observability?

Your systems and applications need proper tooling to gather the telemetry data that observability depends on. You can build your own tools, use open-source software, or adopt a commercial observability solution. Typically, implementing observability involves four key components: logs, traces, metrics, and events.

What are the top observability platforms?

- Middleware

- Splunk

- Datadog

- Dynatrace

- Observe, Inc.

![Understanding Observability: A Definitive Guide [Updated 2024]](https://wpstage.middleware.io/wp-content/uploads/2022/06/Observability-Definition-benefits-challenges-and-more-copy.jpg)